Best Practices in Generative AI Used in the Creation of Accessible Alternative Formats for People with Disabilities

Why It's Important: For math and science to be accessible non-visually, equations in digital or print content must be translated into precise, meaningful non-visual formats. Without this translation, the combination of graphical symbols and text in equations and formulas creates syntax issues that prevent screen readers, which read from left to right, from accurately delivering the information. Creating accessible STEM documents typically requires labor-intensive alt-text generation by a specialist familiar with the concepts, symbols, and syntax (i.e., an alt-media specialist). Meanwhile, using optical character recognition to translate complex notation often produces results that lack the contextual or symbolic understanding needed for accurate translation.

Our Approach: This project benchmarks existing Generative AI tools for translating STEM equations and formulas, aiming to improve efficiency and accuracy for alt-media specialists and enhance STEM content accessibility for blind or low-vision students. We develop evaluation metrics based on contemporary research and user-centered findings and assess the feasibility of integrating these Generative AI tools into workflows at the Center for Inclusive Design and Innovation (CIDI) at Georgia Tech. CIDI supports accessible media at a national and international scale, including resources for post-secondary students with disabilities, the U.S. National Library Service, and the United Nations. This project advances Georgia Tech’s commitment to cutting-edge Generative AI research while ensuring equitable STEM access for students with disabilities nationwide.
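The left-to-right reading problem can be illustrated with a small sketch. It is not part of the project's tooling: the `Sym`, `Add`, and `Frac` classes and both `speak` functions are hypothetical names introduced here only to show how two different equations collapse into the same flat reading, and how a structure-aware reading keeps them apart.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Sym:
    name: str


@dataclass
class Add:
    left: "Expr"
    right: "Expr"


@dataclass
class Frac:
    num: "Expr"
    den: "Expr"


Expr = Union[Sym, Add, Frac]


def speak_flat(e: Expr) -> str:
    """Naive left-to-right reading that ignores grouping."""
    if isinstance(e, Sym):
        return e.name
    if isinstance(e, Add):
        return f"{speak_flat(e.left)} plus {speak_flat(e.right)}"
    return f"{speak_flat(e.num)} over {speak_flat(e.den)}"


def speak(e: Expr) -> str:
    """Structure-aware reading that announces where a fraction starts and ends."""
    if isinstance(e, Sym):
        return e.name
    if isinstance(e, Add):
        return f"{speak(e.left)} plus {speak(e.right)}"
    return (f"the fraction with numerator {speak(e.num)} "
            f"and denominator {speak(e.den)}, end fraction")


a, b, c = Sym("a"), Sym("b"), Sym("c")
whole_fraction = Frac(Add(a, b), c)   # (a + b) / c
partial_fraction = Add(a, Frac(b, c))  # a + (b / c)

print(speak_flat(whole_fraction))
print(speak_flat(partial_fraction))
print(speak(whole_fraction))
print(speak(partial_fraction))
```

Both flat readings come out identical, while the structure-aware readings differ. That gap is the kind of contextual and symbolic understanding an alt-text translation has to preserve.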


Generative AI for Greco-Roman Architectural Reconstruction: From Partial Unstructured Archaeological Descriptions to Structured Architectural Plans

Why It's Important: Among the archaeological data generated from excavations, building remains are the largest in scale and the most complicated to understand and reconstruct. They include fragments of foundations, walls, and architectural details such as columns and beams. The mission of archaeology is to document their state of preservation in drawings, text, and numerical measurements, reconstruct their initial form, and interpret them to understand the history, the societal and cultural values, and their evolution over time. The challenge is that data is always partial and fragmentary to various degrees. Archaeologists must identify the known (invariable) and the missing (variable) parts of the building. To reconstruct a building, they must look at the whole archaeological record and identify parallel examples based on geography, chronology, typology, or patronage from which they can infer the variable parts. This process is laborious and never conclusive, since more than one hypothesis is likely to fit, and it must remain transparent about the distinction between evidence and hypothesis. In theory, Generative AI can greatly simplify this process because it can generate multiple structured depictions (hypotheses) that fill in the gaps based on the input.

Our Approach: The long-term goal of our proposed direction of study is to build computational AI tools that will aid archaeologists, historians, and other scholars in analyzing many architectural records at scale by reasoning about structured hypothetical plans based on free-form partial descriptions from disparate sources. As a first step, for the 12-month period of the project, we propose to systematically investigate initial approaches for leveraging generative AI to generate consistent hypothetical structured plans for buildings given their partial unstructured archaeological descriptions.


- Dataset creation: Data collection from a literature review of thousands of Greek and Roman buildings of different typologies and scales, and from different periods and regions, which will become the input to our AI methods. This will be the first such dataset for computational analysis of ancient architecture.

- Symbolic representation of visual plans: Because these plans are structured compositions of small architectural components, we will build upon prior work on shape grammars [5, 1, 2, 3, 4] and develop prototypical grammars for the orders for different types of buildings. This is not only useful for interpretation and analysis, but also crucial for the development and evaluation of the AI methods that will generate these symbolic plans.

- Evaluation of AI output: Develop protocols, annotation strategies, and evaluation methodology.

- Development of AI techniques: We will a) investigate in-context learning methods for converting descriptions into symbolic representations of plans via LLMs, b) develop structured inference training techniques to produce consistent plans and reduce hallucinations from LLMs, and c) tackle long-form archaeological descriptions and semi-structured tabular archaeological data using retrieval and context compression techniques.

- Expert human interaction with AI output: Develop AI techniques that also produce attribution to data and justification in addition to plan hypotheses for expert verification. We also aim to develop protocols and methods to collect and use expert feedback on AI output to improve performance.
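The distinction between known (invariable) and missing (variable) parts, and the requirement to keep evidence separate from hypothesis, can be sketched as a small enumeration. This is an illustration only, not the project's method: the attribute names, the candidate values, and the `toy_rule` constraint are all hypothetical and carry no archaeological authority.

```python
from itertools import product

# Hypothetical excavation record: attributes that were actually measured.
known = {"type": "peripteral temple", "front_columns": 6}

# Hypothetical candidate values for attributes that did not survive,
# e.g. drawn from parallel buildings of similar date and region.
candidates = {
    "flank_columns": [5, 11, 13],
    "order": ["Doric", "Ionic"],
}


def toy_rule(plan):
    """Illustrative consistency check only: flanks longer than the front."""
    return plan["flank_columns"][0] > plan["front_columns"][0]


def hypotheses(known, candidates, is_consistent):
    """Yield every consistent plan, tagging each value with its provenance."""
    keys = list(candidates)
    for values in product(*(candidates[k] for k in keys)):
        plan = {k: (v, "evidence") for k, v in known.items()}
        plan.update({k: (v, "hypothesis") for k, v in zip(keys, values)})
        if is_consistent(plan):
            yield plan


plans = list(hypotheses(known, candidates, toy_rule))
for plan in plans:
    print(plan)
```

Each generated plan carries an explicit `"evidence"` or `"hypothesis"` tag per attribute, which mirrors the attribution an expert would need in order to verify the output.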

Georgia Tech Microsoft CloudHub Partnership Explores Electric Vehicle Adoption

With new vehicle models being developed by major brands and a growing supply chain, the electric vehicle (EV) revolution seems well underway. But as consumer purchases of EVs have slowed, car makers have backtracked on planned EV manufacturing investments. A major roadblock to wider EV adoption remains the lack of a fully realized charging infrastructure. With just under 51,000 public charging stations nationwide and sizeable gaps between urban and rural areas, inconsistent coverage is a major driver of buyer hesitance.

How do we understand, at a large scale, ways to make it easier for consumers to have confidence in public infrastructure? That is a major issue holding back electrification for many consumer segments.

- Omar Asensio, Associate Professor at Georgia Institute of Technology and Climate Fellow, Harvard Business School | Director, Data Science & Policy Lab

Omar Asensio, associate professor in the School of Public Policy and director of the Data Science and Policy Lab at the Georgia Institute of Technology, and his team have been working to solve this trust issue using the Microsoft CloudHub partnership resources. Asensio is also currently a visiting fellow with the Institute for the Study of Business in Global Society at the Harvard Business School.

The CloudHub partnership gave the Asensio team access to Microsoft’s Azure OpenAI to sift through vast amounts of data collected from different sources and identify relevant connections. The team needed to know whether AI could recognize negative purchaser sentiment within a population whose internal lingo differs from that of the general consumer population. Early results yielded little. The team then used specific example data collected from EV enthusiasts to train the AI, reaching a sentiment classification accuracy that now exceeds that of human experts and of data parsed from government-funded surveys.

The use of trained AI promises to expedite industry response to consumer sentiment at a much lower cost than previously possible. “What we’re doing with Azure is a lot more scalable,” Asensio said. “We hit a button, and within five to 10 minutes, we had classified all the U.S. data. Then I had my students look at performance in Europe, with urban and non-urban areas. Most recently, we aggregated evidence of stations across East and Southeast Asia, and we used machine learning to translate the data in 72 detected languages.”

We are excited to see how access to compute and AI models is accelerating research and having an impact on important societal issues. Omar's research sheds new light on the gaps in electric vehicle infrastructure and AI enables them to effectively scale their analysis not only in the U.S. but globally.

- Elizabeth Bruce, Director, Technology for Fundamental Rights, Microsoft

Asensio's pioneering work illustrates the interdisciplinary nature of today’s research environment, from machine learning models predicting problems to assisting in improving EV infrastructure. The team is planning on applying the technique to datasets next, to address equity concerns and reduce the number of “charging deserts.” The findings could lead to the creation of policies that help in the adoption of EVs in infrastructure-lacking regions for a true automotive electrification revolution and long-term environmental sustainability in the U.S.

- Christa M. Ernst

Source Paper: Reliability of electric vehicle charging infrastructure: A cross-lingual deep learning approach - ScienceDirect

Modeling the Dispersal and Connectivity of Marine Larvae with GenAI Agents

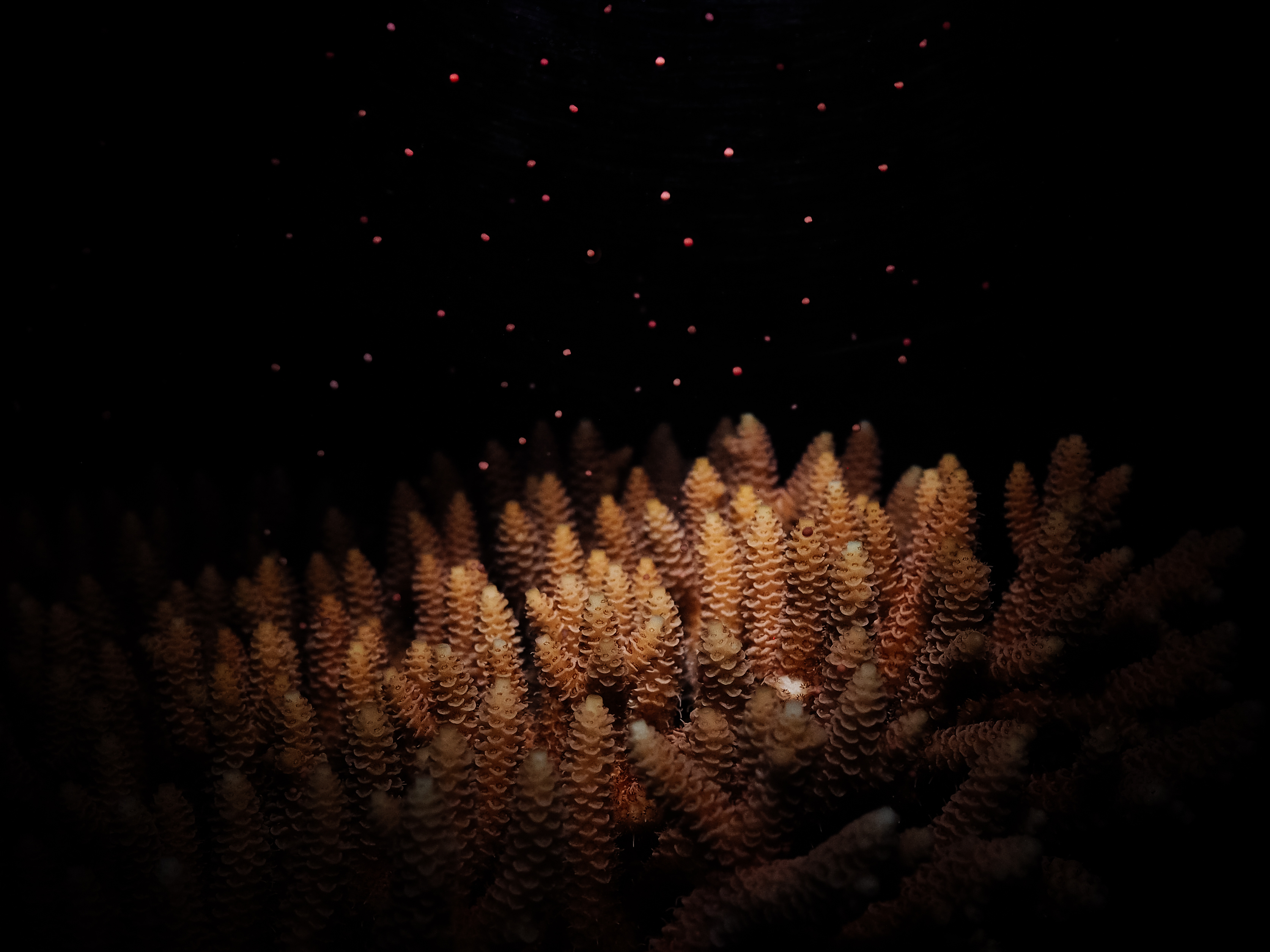

Why It's Important: Larval dispersal and settlement is a critical phase in the life cycle of many marine species, including corals, significantly impacting their survival, distribution, and population dynamics. Understanding these early life stage movements is essential for conserving marine biodiversity and managing seafood stocks. According to the International Union for Conservation of Nature, over 8% of the 17,903 marine species assessed are at risk of extinction. Most importantly, warm-water coral reefs are extremely vulnerable to warming. They are estimated to support a quarter to one third of marine biodiversity, including over 25% of marine fish species, and provide nearly US$9.8 trillion worth of ecosystem services each year. An estimated 50% of global live coral cover has been lost over the last 50 years, primarily due to ocean warming. Knowledge of larval migration can play a pivotal role in the conservation of these species.

Our Approach: Our project aims to achieve the following concrete goals. (1) Simulate a single larva using a generative AI agent. The agent can perceive environmental information and take actions similar to those of larvae. (2) Simulate a group of larvae with a network of communicative agents. The agents are able to communicate like larvae and make collective decisions. (3) Apply our framework to three specific cases to investigate the larval dispersal trajectory and settlement region to identify nursery habitats for Red Snapper and Vermilion Snapper in the Gulf of Mexico, and for Acropora corals, found in the Florida Keys, Bahamas, Caribbean and the western portion of the Gulf of Mexico. This approach offers, for the first time, the possibility to simulate the emergence of collective intelligence for larvae groups.
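The perceive-then-act loop in goal (1) can be sketched as follows. This is a toy under stated assumptions, not the project's simulator: the uniform eastward `current`, the `on_habitat` region, and the rule-based `policy` are all hypothetical, and `policy` merely stands in for the place where a generative AI agent would choose the action.

```python
import random
from dataclasses import dataclass


@dataclass
class Larva:
    x: float
    y: float
    settled: bool = False


def current(x, y):
    """Toy uniform eastward flow (hypothetical)."""
    return (1.0, 0.0)


def on_habitat(x, y):
    """Toy settlement region: everything east of x = 10 (hypothetical)."""
    return x >= 10.0


def policy(obs, rng):
    """Stand-in for the generative agent: maps an observation to an action."""
    if obs["on_habitat"]:
        return ("settle", 0.0)
    # Small random cross-current swimming while drifting.
    return ("swim", rng.uniform(-0.2, 0.2))


def simulate(larva, steps, seed=0):
    """Run the perceive/act loop and return the visited positions."""
    rng = random.Random(seed)
    track = [(larva.x, larva.y)]
    for _ in range(steps):
        obs = {
            "current": current(larva.x, larva.y),
            "on_habitat": on_habitat(larva.x, larva.y),
        }
        action, swim_dy = policy(obs, rng)
        if action == "settle":
            larva.settled = True
            break
        u, v = obs["current"]
        larva.x += u
        larva.y += v + swim_dy
        track.append((larva.x, larva.y))
    return track


larva = Larva(x=0.0, y=0.0)
track = simulate(larva, steps=20)
print(larva.settled, track[-1])
```

Extending this toward goal (2) would mean running several such agents and letting each observation include messages from neighbors. How larvae actually communicate is a modeling question the sketch does not address.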

Accelerating Materials Development Through Generative AI Based Dimensionality Expansion Techniques

PI: Surya Kalidindi, Regents Professor, and Rae S. and Frank H. Neely Chair Professor in the Woodruff School of Mechanical Engineering, Georgia Tech

Co-PI: Peng Chen, Assistant Professor, School of Computational Science and Engineering, Georgia Tech

GRA Lead: Michael Buzzy, Graduate Research Assistant, Computational Science and Engineering, Georgia Tech

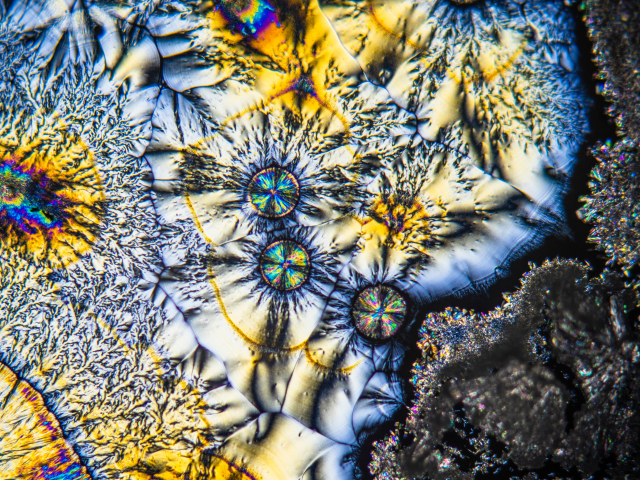

Why It's Important: The development of materials with superior properties has been a longstanding key enabler of technological advancement and a core pillar of scientific research, driving innovation across various industries and pushing the boundaries of what is possible in engineering and technology. However, the limitations of current microscopy techniques present a critical obstacle, as 3D microscopy—while essential for in-depth studies of material structures—is prohibitively expensive and time-consuming. In contrast, 2D microscopy is much more affordable and accessible, but it falls short in capturing the full complexity of a material's internal structure. The ability to construct 3D material structures from 2D microscopy images in a cost-effective manner would represent a transformative advancement in materials science, enabling faster and more efficient development of advanced materials.

Our Approach: Our approach has two primary objectives. The first goal is to develop a generative model that captures the statistical distribution of 3D microstructural features in polycrystalline materials. These features, which include the spatial arrangement of grains and crystallites, significantly influence the macroscopic properties of materials. The generative model will be constructed using existing 3D experimental data, leveraging advanced machine learning techniques, particularly Denoising Diffusion Models, to learn the complex joint probabilities of the 3D microstructures.


The second objective involves constructing 3D material structures that are statistically consistent with new 2D microscopy slices obtained from material samples. This ill-posed problem will be tackled using a Bayesian inversion approach, which will leverage the generative prior to regularize the solution space. The innovative application of Plug and Play MCMC (PnP MCMC) algorithms will allow for modular conditioning of generative models, facilitating the integration of datasets with different dimensionalities and enabling the generation of 3D structures from 2D data.
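The Bayesian inversion described above can be written schematically as follows. The notation is an assumption made for illustration and does not come from the project: $x$ denotes a 3D microstructure, $y$ the observed 2D slice data, $p_\theta(x)$ the learned generative (diffusion) prior, and $S$ a hypothetical operator that maps a 3D structure to the quantities measured in 2D. The Gaussian noise model is likewise only one possible choice of likelihood.

```latex
% Posterior over 3D structures given 2D observations (Bayes' rule):
p(x \mid y) \;\propto\; p(y \mid x)\, p_\theta(x)

% One possible likelihood, assuming y = S(x) + \varepsilon with
% \varepsilon \sim \mathcal{N}(0, \sigma^2 I):
p(y \mid x) \;\propto\; \exp\!\left( -\frac{\lVert y - S(x) \rVert^2}{2\sigma^2} \right)
```

The problem is ill-posed because many 3D structures $x$ produce the same 2D data $y$. The prior $p_\theta(x)$ regularizes the solution by concentrating posterior mass on structures that resemble the 3D training data, and an MCMC sampler then draws candidate structures from $p(x \mid y)$.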

The research is expected to have a transformative impact on the design, development, and deployment of new and improved materials in advanced technologies. By enabling the accurate and efficient reconstruction of 3D material structures from 2D images, the proposed work will significantly enhance the understanding of process-structure-property (PSP) linkages and accelerate the development of materials with superior properties.

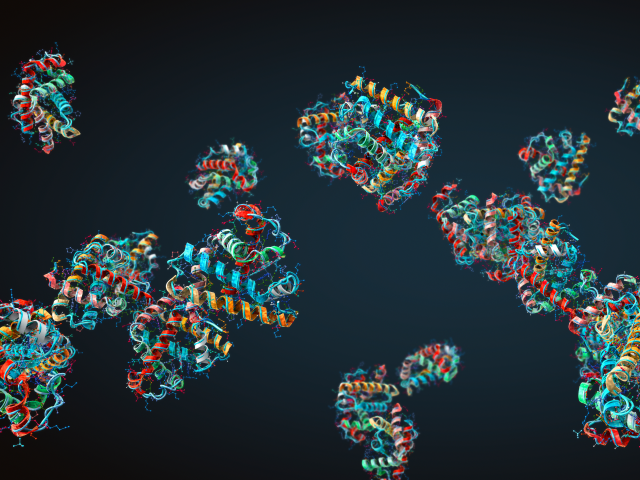

Designing New and Diverse Proteins with Generative AI

Why It's Important: Proteins are the most complex molecules in nature and mediate various processes essential to life. While natural proteins perform vital biological functions, their native functionalities are often sub-optimal for practical applications. Designing new proteins with desired functions has the potential to extend the function repository of proteins that nature has so far evolved.

Our Approach: This project aims to develop new generative artificial intelligence (GenAI) methods and explore the opportunities they create for accelerating scientific discovery in biology, particularly designing proteins for important applications such as therapeutics, clean energy, and sustainability. Leveraging recent progress in GenAI, such as large language models (LLMs), we will develop a protein-focused foundation model based on LLMs of protein sequences, incorporating experimental data on protein functions to steer the LLM toward generating functional proteins.

Applying Generative AI for STEM Education

PI: Noura Howell, Assistant Professor, Digital Media & Interactive Computing, Georgia Tech

Co-PI: Michael Nitsche, Professor, Digital Media, Georgia Tech

Community Partner: Kalia Morrison, Executive Director, Decatur Makers

Researcher: Supratim Pait, PhD Student, Digital Media, Georgia Tech

Why It's Important: Generative AI has sparked controversy in educational contexts, raising ethical concerns. There is a need to responsibly and ethically unlock generative AI's transformative potential for education. Meanwhile, marginalized communities are both disproportionately impacted by harmful biases in AI systems and have less access to the STEM education through which they could innovatively address these harms. There is a need to empower the next generation of diverse AI innovators who can reimagine and rebuild AI to support diverse, local communities. This is the goal of our project.

Our Approach: This proposal will develop educational approaches with generative AI to support marginalized youth in learning AI literacy and AI ethics, with our community partner Decatur Makers. We will host workshops for children aged 10-12, a key development stage for identity formation and career aspirations. Our approach combines generative AI in education, AI literacy, AI ethics, and design futuring. Design futuring helps center children’s agency and authorship. Design futuring imagines future speculative technologies as a means of critically considering ethics of technology.


We will develop educational tools and conduct a series of workshops. Children will learn fundamentals of how generative AI works, discuss ethics of real-world generative AI systems, and imagine alternative futures for how generative AI can support their communities. To express their imagined futures, children will create characters, scenes, and stories using generative AI. We will integrate established performance and storytelling techniques known to increase cultural relevance and empowerment of educational content. Through this, students leverage generative AI while amplifying their individual creative agency. We will also engage K12 teachers for feedback and sharing lesson plans for broader educational impact.

Image Credit: Decatur Makerspace

Intelligent LLM Agents for Materials Design and Automated Experimentation

Why It's Important: Rapidly and efficiently identifying new materials with desired physical and functional properties is a longstanding challenge with significant scientific and economic implications. Evaluating vast spaces of possible materials can be an intractable problem both experimentally in the lab and computationally via ab-initio simulations. Generative AI and Large Language Models (LLMs) provide a promising avenue to address these challenges by significantly improving the efficiency and success rate of materials discovery. In particular, LLMs have shown exceptional capability for reasoning, self-reflection, and decision-making in a range of different domains, including the physical sciences.

Our Approach: We seek to leverage these capabilities of LLMs, namely, 1) their ability to interpret human instructions and guidance, 2) their ability to reason and make decisions, and 3) their ability to internalize scientific knowledge, to create an agent which can intelligently design new materials with human-like capabilities. In this project we will develop and systematically benchmark, in silico, an LLM-driven framework for automated computational and experimental materials design with natural language-guided behavior and advanced reasoning capabilities. This approach represents a paradigm shift from existing materials design approaches based on high-throughput screening or traditional optimization methods using Bayesian methods or genetic algorithms, with the potential to enable and accelerate the design of novel materials with transformative properties.

Adaptive and Robust Alignment of LLMs with Complex Rewards

Why It's Important: Large Language Models (LLMs) like ChatGPT have shown remarkable abilities in various tasks, but ensuring they align with human values remains challenging. Our research aims to develop innovative methods for better aligning LLMs with human intent, making them more helpful, safer, and trustworthy across diverse applications.

Current alignment methods often struggle to capture the nuanced, context-dependent nature of human preferences and face challenges with uncertain or biased feedback. Our project addresses these limitations through multi-objective reward modeling, uncertainty-driven reward calibration, and robust handling of potentially corrupted feedback.

Our Approach: We're introducing several key innovations: a context-aware system that dynamically balances multiple objectives, an adaptive approach to model preference strengths in human feedback, and techniques to identify and mitigate the impact of inconsistent guidance. These methods are designed to enhance existing LLM training approaches, improving their ability to align with human values.
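One way to picture a context-aware balance between objectives is a weighted sum whose weights depend on the context. The sketch below is an assumption for illustration, not the project's method: the softmax gating, the function names, and the example scores are all hypothetical.

```python
import math


def softmax(scores):
    """Turn arbitrary context scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def combined_reward(objective_rewards, context_scores):
    """Weight each objective's reward by its context-dependent importance."""
    weights = softmax(context_scores)
    return sum(w * r for w, r in zip(weights, objective_rewards))


# Hypothetical per-objective rewards for one response: [helpfulness, safety].
rewards = [1.0, 0.0]

# Context that treats both objectives equally.
print(combined_reward(rewards, [0.0, 0.0]))

# Context that strongly prioritizes the second objective (safety).
print(combined_reward(rewards, [0.0, 10.0]))
```

With equal context scores the combined reward is the plain average. When the context strongly favors safety, the same response scores close to its safety reward alone.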

By improving LLM alignment, our research could enable more tailored AI assistance, guide better code generation, help combat misinformation, and adapt LLMs for specialized scientific fields. Ultimately, we aim to make LLMs more trustworthy, interpretable, and adaptable, fostering their responsible deployment across a wide range of applications.