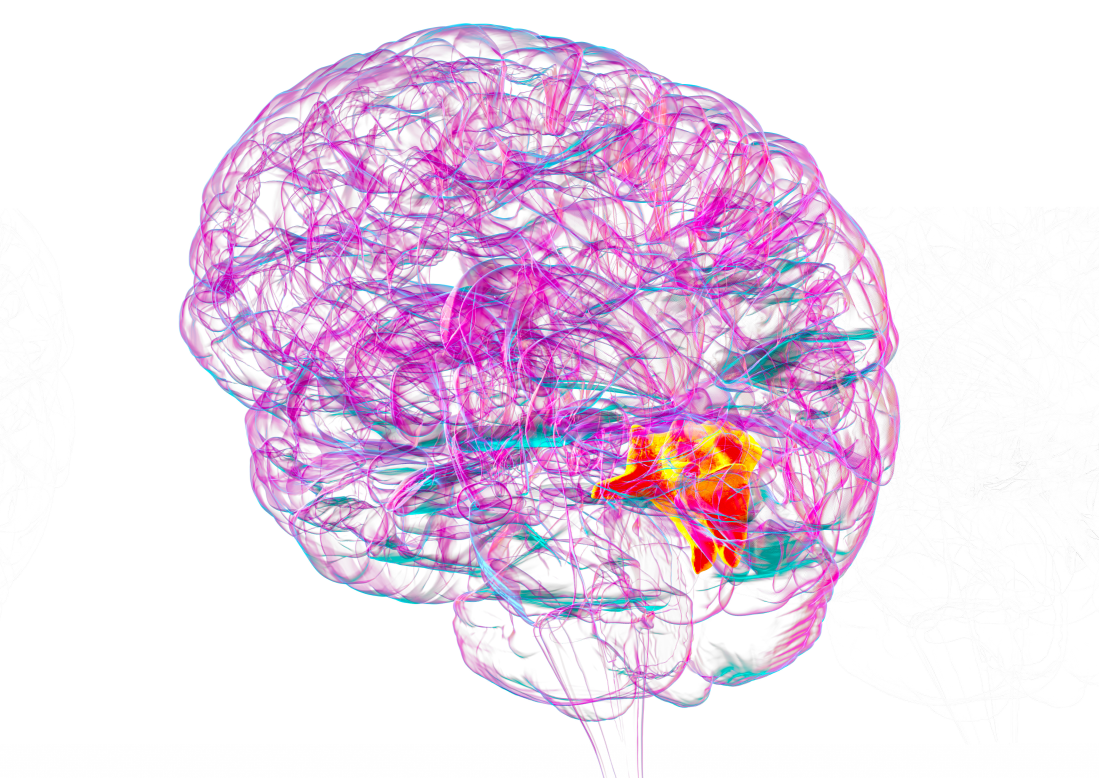

Why It's Important: The primate higher visual cortex, particularly inferior temporal (IT) cortex, supports recognition by encoding high-level visual semantics such as object category, pose, and identity. Decoding analyses can show that such information is present, but they often fail to reveal how it is organized across population activity. Mixed selectivity further complicates interpretation, because individual neurons' responses blend multiple factors. For both neuroscience and AI, we need models that expose interpretable, biologically plausible subspaces carrying distinct semantic factors, along with tools that make those factors visible to humans rather than leaving them as abstract coordinates. Achieving this would clarify how semantics are factorized across neural populations, enable principled comparisons with artificial networks, and guide the design of vision systems that are robust, transparent, and better aligned with human understanding.

Our Approach: We develop a generative analysis framework that couples group-structured latent variable modeling of neural activity with diffusion-based visual probing. First, we fit a compact variational model in which the latent space is partitioned into groups intended to capture separable semantic dimensions. Light supervision can anchor some groups to experimental factors such as in-plane rotation, while additional groups are discovered directly from neural data. This design favors subspace-level interpretability and mitigates the opacity of a single monolithic code.
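To make the group-structured objective concrete, the sketch below computes a negative ELBO for a partitioned latent space plus a light supervision term that anchors one group to in-plane rotation. It is a minimal illustration under stated assumptions, not the project's model: the linear encoder and decoder, the Poisson spike-count likelihood, the group sizes in the hypothetical `GROUP_SIZES`, and the encoding of rotation as `(cos, sin)` are all illustrative choices, and the function names are hypothetical.

```python
import numpy as np

# Hypothetical group layout (assumption): group 0 is anchored to in-plane
# rotation; groups 1 and 2 are unsupervised and must be discovered from data.
GROUP_SIZES = [2, 3, 3]

def split_groups(z, sizes=GROUP_SIZES):
    """Split a (batch, sum(sizes)) latent matrix into per-group blocks."""
    return np.split(z, np.cumsum(sizes)[:-1], axis=1)

def gaussian_kl(mu, logvar):
    """KL(q(z|x) || N(0, I)) per sample, summed over latent dimensions."""
    return 0.5 * np.sum(np.exp(logvar) + mu**2 - 1.0 - logvar, axis=1)

def group_vae_loss(x, W_enc, W_dec, rotation_deg, rng, sup_weight=1.0):
    """Negative ELBO plus a light supervision penalty on group 0.

    x: (batch, n_neurons) spike counts
    W_enc: (n_neurons, 2*D) linear encoder producing [mu, logvar] (assumption)
    W_dec: (D, n_neurons) linear decoder giving Poisson log-rates (assumption)
    """
    D = sum(GROUP_SIZES)
    h = x @ W_enc
    mu, logvar = h[:, :D], h[:, D:]
    # Reparameterization trick: sample z = mu + sigma * eps.
    z = mu + np.exp(0.5 * logvar) * rng.standard_normal(mu.shape)
    log_rate = z @ W_dec
    # Poisson log-likelihood, dropping the constant -log(x!).
    log_lik = np.sum(x * log_rate - np.exp(log_rate), axis=1)
    kl = gaussian_kl(mu, logvar)
    # Light supervision: the mean of group 0 should match (cos, sin) of rotation.
    theta = np.deg2rad(rotation_deg)
    target = np.stack([np.cos(theta), np.sin(theta)], axis=1)
    z_rot = split_groups(mu)[0]
    sup = np.sum((z_rot - target) ** 2, axis=1)
    return float(np.mean(-log_lik + kl + sup_weight * sup))

# Toy usage on synthetic counts (no real recordings involved).
rng = np.random.default_rng(0)
n_trials, n_neurons, D = 16, 40, sum(GROUP_SIZES)
x = rng.poisson(2.0, size=(n_trials, n_neurons)).astype(float)
W_enc = 0.01 * rng.standard_normal((n_neurons, 2 * D))
W_dec = 0.1 * rng.standard_normal((D, n_neurons))
loss = group_vae_loss(x, W_enc, W_dec, rng.uniform(0.0, 360.0, n_trials), rng)
```

Setting `sup_weight=0` recovers a purely unsupervised grouped model; only the anchored group receives a supervision signal, and the remaining groups are shaped by the likelihood and KL terms alone.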

Second, we introduce diffusion-guided probing that turns latent groups into concrete visual explanations. After the neural latent groups are learned, a controllable diffusion image generator synthesizes photorealistic stimuli predicted to maximally vary a chosen neural group while keeping the other groups relatively stable. The resulting images act as "concept renderings" of the neural code, letting investigators see whether a group aligns with pose, category structure, identity, or other high-level features. Because the generator is decoupled from the neural model, we retain experimental control, reduce the risk of overfitting, and can test invariances through targeted manipulations of the synthesized stimuli.
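One way to formalize "maximally vary a chosen group while keeping others stable" is as a quadratic trade-off in the generator's latent space. The sketch below assumes a local linearization: a Jacobian `J` of the predicted neural latents with respect to the generator latent (obtainable in practice by automatic differentiation through generator and neural encoder). The penalty weight `lam`, the eigenvector solution, and the names `probe_direction` and `probe_sweep` are hypothetical illustrations, not the project's stated objective; a random matrix stands in for a real diffusion model and fitted encoder.

```python
import numpy as np

def probe_direction(J, group_slices, target, lam=1.0):
    """Unit direction in generator-latent space that maximizes change in one
    neural group while penalizing change in all other groups.

    J: (D_neural, D_gen) Jacobian of predicted neural latents w.r.t. the
       generator latent (a local linearization; assumption).
    Solves max_d ||J_t d||^2 - lam * ||J_o d||^2 subject to ||d|| = 1,
    whose maximizer is the top eigenvector of J_t^T J_t - lam * J_o^T J_o.
    """
    idx = np.arange(J.shape[0])
    tgt = idx[group_slices[target]]
    others = np.concatenate(
        [idx[s] for i, s in enumerate(group_slices) if i != target]
    )
    J_t, J_o = J[tgt], J[others]
    M = J_t.T @ J_t - lam * (J_o.T @ J_o)
    _, evecs = np.linalg.eigh(M)  # eigenvalues returned in ascending order
    return evecs[:, -1]

def probe_sweep(z0, d, steps):
    """Generator latents along the probe direction, one row per rendered frame."""
    return z0[None, :] + steps[:, None] * d[None, :]

# Toy usage with a random stand-in Jacobian (not fitted to any data).
rng = np.random.default_rng(0)
slices = [slice(0, 2), slice(2, 5), slice(5, 8)]
J = rng.standard_normal((8, 16))
d = probe_direction(J, slices, target=0, lam=5.0)
frames = probe_sweep(np.zeros(16), d, np.linspace(-2.0, 2.0, 7))
```

Each row of `frames` would be passed to the image generator to render one step of a "concept rendering" sweep; raising `lam` trades off the size of the target-group change against leakage into other groups.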

Third, we evaluate the framework on IT recordings from object recognition paradigms. Predictive validity is assessed by held-out neural likelihood and by decoding accuracy for controlled variables. Disentanglement and subspace specificity are quantified with standard metrics adapted to neural data and by measuring how stable each group remains under orthogonal manipulations. Interpretability is assessed with blinded human ratings and with task-driven tests that verify whether diffusion probes selectively modulate the expected neural dimensions. Together, these analyses link latent structure to visual semantics in a way that is both statistically sound and directly interpretable.
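The "stability of each group under orthogonal manipulations" can be summarized as a specificity matrix: manipulate one factor at a time and measure what fraction of the resulting latent change lands in each group. The sketch below is a hypothetical illustration of that idea, not one of the standard disentanglement metrics the text refers to; `specificity_matrix`, `specificity_score`, and the pairing of factor k with group k are assumptions, and the data are synthetic.

```python
import numpy as np

def specificity_matrix(z_base, z_manip, group_slices):
    """Fraction of squared latent change falling in each group, per manipulation.

    z_base: (n_stim, D) latents for baseline stimuli
    z_manip: (n_factors, n_stim, D) latents after manipulating one factor at a time
    Returns S of shape (n_factors, n_groups); each row sums to 1.
    """
    delta_sq = (z_manip - z_base[None]) ** 2  # (F, n_stim, D)
    per_group = np.stack(
        [delta_sq[..., s].sum(axis=-1).mean(axis=-1) for s in group_slices],
        axis=1,
    )  # (F, G): mean squared change per group
    return per_group / per_group.sum(axis=1, keepdims=True)

def specificity_score(S):
    """Mean diagonal mass of S; 1.0 means each factor moves only its own group.
    Assumes factor k is paired with group k (square S)."""
    return float(np.mean(np.diag(S)))

# Toy check: each manipulation perturbs exactly one group.
rng = np.random.default_rng(1)
slices = [slice(0, 2), slice(2, 5), slice(5, 8)]
z_base = rng.standard_normal((50, 8))
z_manip = np.repeat(z_base[None], 3, axis=0)
for k, s in enumerate(slices):
    z_manip[k][:, s] += rng.standard_normal((50, s.stop - s.start))
S = specificity_matrix(z_base, z_manip, slices)
score = specificity_score(S)  # 1.0 for this perfectly specific toy case
```

In real data, off-diagonal mass in `S` would indicate that a manipulation intended for one factor also shifts other groups, which is the mixed-selectivity signature the framework aims to untangle.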

Expected Outcomes & Impact: Scientifically, the project will provide a clear account of how high-level semantics can be organized across neural subspaces in IT, offering new traction on mixed selectivity and the geometry of population codes. For AI, the work delivers actionable design principles for inducing explicit, human-interpretable factors in vision models—improving robustness, controllability, and transparency. Community deliverables will include open-source code for group-structured neural latent modeling, diffusion-based probing utilities, and minimal, reproducible examples for shared and synthetic datasets. A concise web explainer will present interactive probe sliders and side-by-side visualizations of latent-group manipulations to make findings accessible to non-specialists.