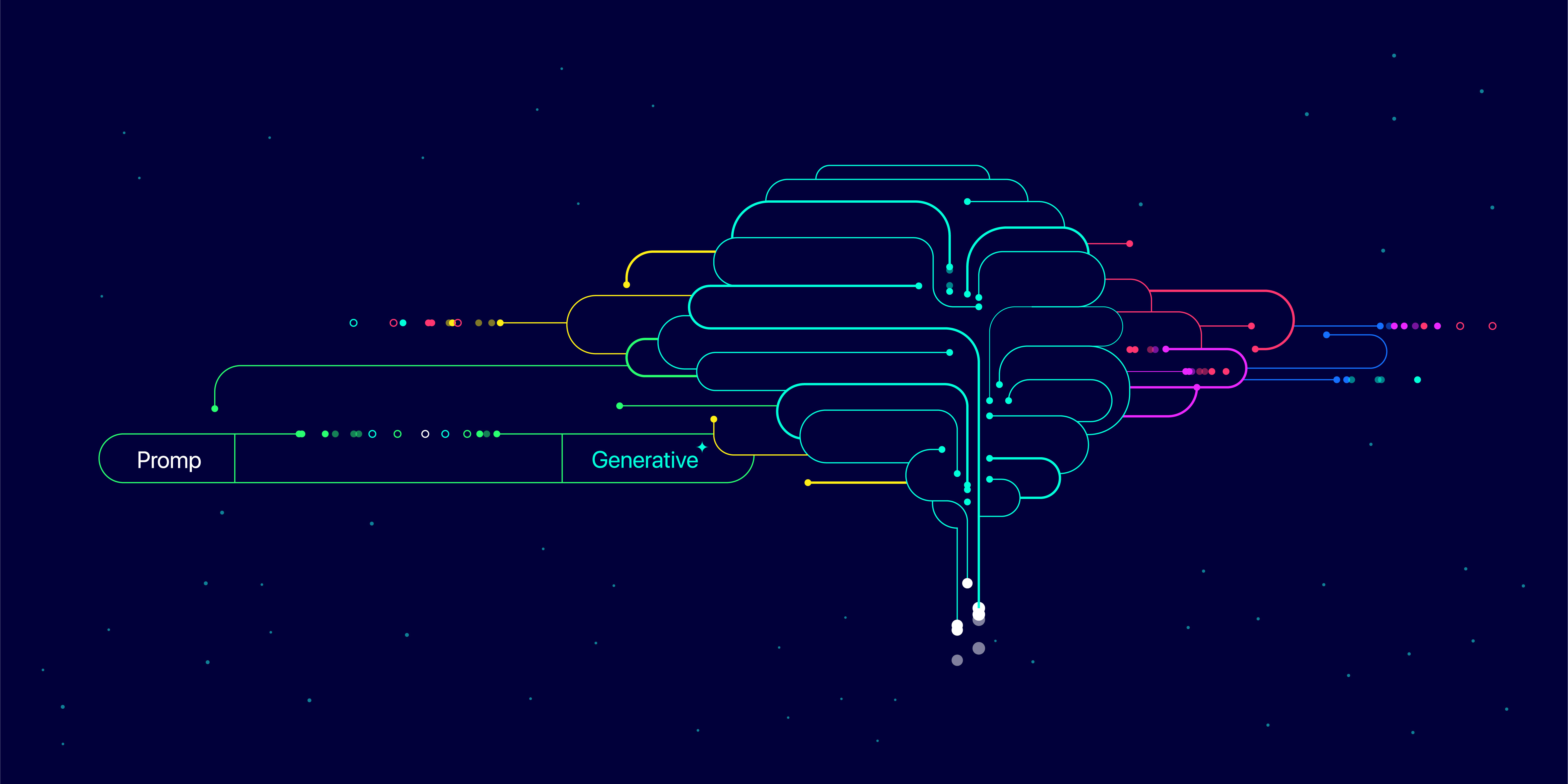

Why It's Important: Large Language Models (LLMs) such as ChatGPT have shown remarkable abilities across a wide range of tasks, but ensuring that they align with human values remains challenging. Our research aims to develop new methods for aligning LLMs more closely with human intent, making them more helpful, safer, and more trustworthy across diverse applications.

Current alignment methods often struggle to capture the nuanced, context-dependent nature of human preferences, and they cope poorly with feedback that is uncertain or biased. Our project addresses these limitations through multi-objective reward modeling, uncertainty-driven reward calibration, and robust handling of potentially corrupted feedback.

Our Approach: We are introducing three key innovations. The first is a context-aware system that dynamically balances multiple objectives; the second is an adaptive approach to modeling the strength of preferences expressed in human feedback; the third is a set of techniques for identifying inconsistent feedback and mitigating its impact. These methods are designed to enhance existing LLM training approaches and improve their ability to align models with human values.

By improving LLM alignment, our research could enable more tailored AI assistance, improve code generation, help combat misinformation, and adapt LLMs to specialized scientific fields. Ultimately, we aim to make LLMs more trustworthy, interpretable, and adaptable, supporting their responsible deployment across a wide range of applications.